🧠 Emotion Recognition Model – VGG19 (Fine-Tuned on CK+ and RAF-DB)

📘 Overview

This repository provides a fine-tuned VGG19-based emotion recognition model trained on a combination of the CK+ and RAF-DB datasets. The model classifies human facial emotions into seven categories and is available both as a full TensorFlow model and as a size-optimized TensorFlow Lite model.

The model is a key module in a broader AI system for emotion-aware human-computer interaction, providing robust real-time emotion inference.

🧩 Model Architecture

The model is based on VGG19 pre-trained on ImageNet, and fine-tuned in two stages:

Stage 1 – Frozen Base Training (10 Epochs):

- The convolutional base (VGG19) was frozen.

- Only newly added dense layers were trained.

- Purpose: Train classifier layers without disrupting pre-trained features.

Stage 2 – Unfrozen Base Fine-Tuning (30 Epochs):

- The base model was unfrozen and fine-tuned with a low learning rate (1e-4).

- Purpose: Enhance generalization and feature learning for emotion-specific characteristics.
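The two stages above can be sketched in Keras roughly as follows. This is a minimal sketch, not the exact training script: the head layout (global average pooling, a 256-unit dense layer, dropout of 0.5) and the stage 1 learning rate of 1e-3 are assumptions, since the card only states the stage 2 rate. The names `build_model`, `compile_model`, and `train_two_stage` are hypothetical.

```python
import tensorflow as tf

NUM_CLASSES = 7          # seven emotion categories
IMAGE_SIZE = (224, 224)  # input size from the training configuration


def build_model(weights="imagenet", input_shape=IMAGE_SIZE + (3,)):
    """VGG19 convolutional base plus a small dense head (head layout is assumed)."""
    base = tf.keras.applications.VGG19(
        include_top=False, weights=weights, input_shape=input_shape
    )
    base.trainable = False  # Stage 1: keep the pre-trained features fixed
    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dense(256, activation="relu")(x)  # assumed width
    x = tf.keras.layers.Dropout(0.5)(x)                    # assumed rate
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs), base


def compile_model(model, learning_rate):
    """(Re)compile with Adam and sparse categorical crossentropy."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )


def train_two_stage(model, base, train_ds, val_ds):
    """Run the frozen-base stage, then the unfrozen fine-tuning stage."""
    # Stage 1: only the new dense layers are trainable (10 epochs).
    compile_model(model, learning_rate=1e-3)  # assumed rate, not stated in the card
    model.fit(train_ds, validation_data=val_ds, epochs=10)
    # Stage 2: unfreeze the base and continue with a low learning rate (30 epochs).
    base.trainable = True
    compile_model(model, learning_rate=1e-4)
    model.fit(train_ds, validation_data=val_ds, epochs=30)
    return model
```

Recompiling after changing `base.trainable` matters: Keras picks up the new set of trainable weights only when the model is compiled again.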

📊 Datasets

Two publicly available datasets, CK+ and RAF-DB, were used for training and evaluation.

Dataset Preparation

- Combined both datasets for richer emotion diversity.

- Applied class balancing through image augmentation (rotation, flips, brightness, zoom, and shift).

- Final dataset distribution was uniform across emotion classes.
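The balancing step can be illustrated with a small NumPy sketch. It is an illustration under assumptions, not the original pipeline: it oversamples every class up to the size of the largest class (the card only says the final distribution was uniform), and it implements only flip, brightness, and shift; rotation and zoom are omitted to keep the example dependency-free. The function names are hypothetical.

```python
import numpy as np


def augmentation_counts(labels):
    """Return {class_id: extra samples needed} to match the largest class."""
    classes, counts = np.unique(labels, return_counts=True)
    target = counts.max()
    return {int(c): int(target - n) for c, n in zip(classes, counts)}


def augment(image, rng):
    """Random flip, brightness change, and shift for an HxWxC float image in [0, 1]."""
    out = image.copy()
    if rng.random() < 0.5:
        out = out[:, ::-1, :]                              # horizontal flip
    out = np.clip(out * rng.uniform(0.8, 1.2), 0.0, 1.0)   # brightness scaling
    dy, dx = rng.integers(-10, 11, size=2)                 # shift of up to 10 px
    # np.roll wraps pixels around the border, a simplification of a padded shift.
    return np.roll(out, shift=(int(dy), int(dx)), axis=(0, 1))


def balance(images, labels, seed=0):
    """Oversample minority classes with augmented copies until all classes match."""
    rng = np.random.default_rng(seed)
    new_images, new_labels = [images], [labels]
    for cls, extra in augmentation_counts(labels).items():
        if extra == 0:
            continue
        pool = images[labels == cls]
        picks = rng.integers(0, len(pool), size=extra)
        new_images.append(np.stack([augment(pool[i], rng) for i in picks]))
        new_labels.append(np.full(extra, cls, dtype=labels.dtype))
    return np.concatenate(new_images), np.concatenate(new_labels)
```

Augmented copies should be generated for the training split only, so that validation and test images remain unmodified originals.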

⚙️ Training Configuration

| Parameter | Description |
|---|---|
| Base Model | VGG19 (Pre-trained on ImageNet) |
| Optimizer | Adam |
| Learning Rate | 1e-4 (unfrozen phase) |
| Loss Function | Sparse Categorical Crossentropy |
| Batch Size | 32 |
| Epochs | 40 (10 + 30) |
| Image Size | 224x224 |
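Sparse categorical crossentropy expects integer class indices (0 to 6 here) rather than one-hot vectors, and averages the negative log-probability assigned to the true class. The NumPy sketch below shows the computation on toy values; the probabilities are made up for illustration and are not model outputs.

```python
import numpy as np


def sparse_categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean negative log-probability of the true class.

    y_true: integer class indices, shape (N,)
    y_prob: predicted probabilities, shape (N, num_classes)
    """
    y_prob = np.clip(y_prob, eps, 1.0)                 # avoid log(0)
    picked = y_prob[np.arange(len(y_true)), y_true]    # probability of the true class
    return float(-np.log(picked).mean())


# Toy values for illustration only.
y_true = np.array([3, 0])
y_prob = np.array([
    [0.05, 0.05, 0.05, 0.70, 0.05, 0.05, 0.05],
    [0.40, 0.10, 0.10, 0.10, 0.10, 0.10, 0.10],
])
loss = sparse_categorical_crossentropy(y_true, y_prob)
print(f"loss = {loss:.4f}")
```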

📈 Performance Summary

| Metric | Training | Validation | Testing |
|---|---|---|---|
| Accuracy | 97.42% | 81.26% | 77.51% |
| Loss | 0.0910 | 1.0053 | 1.4182 |

Classification Report

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| 0 | 0.72 | 0.65 | 0.68 |
| 1 | 0.39 | 0.46 | 0.42 |
| 2 | 0.46 | 0.51 | 0.49 |
| 3 | 0.95 | 0.84 | 0.89 |
| 4 | 0.67 | 0.89 | 0.76 |
| 5 | 0.79 | 0.67 | 0.72 |
| 6 | 0.83 | 0.74 | 0.78 |

Overall Accuracy: 77.51%

Weighted F1-Score: 0.78
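For readers who want to reproduce this kind of report, the per-class metrics, overall accuracy, and weighted F1-score can all be derived from a confusion matrix. The sketch below uses a made-up 3-class matrix purely for illustration; it does not contain the model's actual test counts, and the function names are hypothetical.

```python
import numpy as np


def per_class_metrics(cm):
    """cm[i, j] = number of samples with true class i predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    support = cm.sum(axis=1)    # row sums: true samples per class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, support, out=np.zeros_like(tp), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1, support


def summarize(cm):
    """Return (overall accuracy, support-weighted F1)."""
    _, _, f1, support = per_class_metrics(cm)
    accuracy = np.trace(np.asarray(cm)) / np.sum(cm)
    weighted_f1 = np.sum(f1 * support) / support.sum()
    return float(accuracy), float(weighted_f1)


# Made-up confusion matrix, for illustration only.
toy_cm = [[8, 2, 0],
          [1, 7, 2],
          [0, 1, 9]]
accuracy, weighted_f1 = summarize(toy_cm)
print(f"accuracy = {accuracy:.4f}, weighted F1 = {weighted_f1:.4f}")
```

The weighted F1-score averages the per-class F1 values using each class's number of true samples as the weight, so frequent classes influence it more than rare ones.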

🖼️ Visualizations

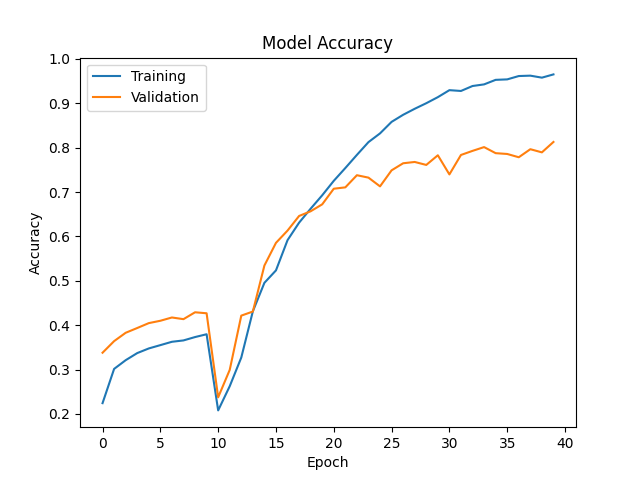

1. Training Accuracy and Loss

Graph 1: Training and Validation Accuracy vs Epochs

Training and validation accuracy over 40 epochs.

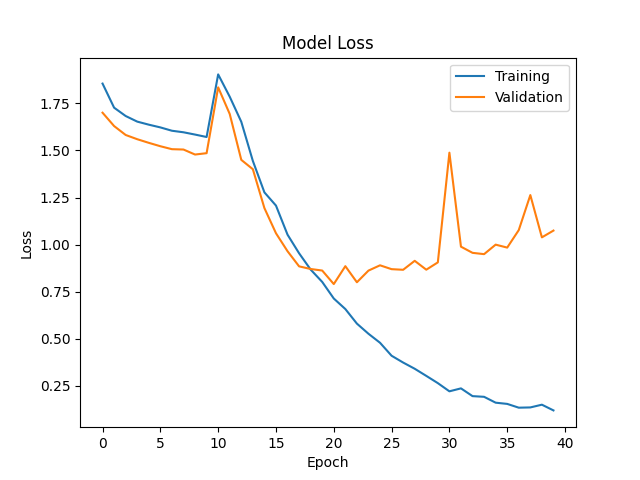

Graph 2: Training and Validation Loss vs Epochs

Training and validation loss over 40 epochs.

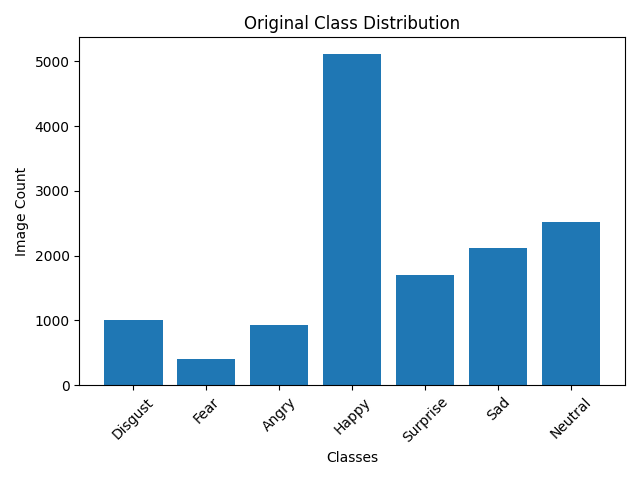

2. Dataset Distributions

Graph 3: Original Dataset Class Distribution

Class distribution of the original combined dataset.

Graph 4: Balanced (Augmented) Dataset Class Distribution

Class distribution after augmentation and balancing.

3. Evaluation Visuals

Graph 5: Multi-Class ROC Curves (AUC per class)

Multi-class ROC curves with AUC values.

Graph 6: Confusion Matrix (Heatmap)

Confusion matrix heatmap on the test set.

Graph 7: Sample Test Results (Subplots of 5 predictions per class)

Sample predictions (5 images per class) showing model performance.

These visualizations show the training and validation curves over the 40 epochs, the class distribution before and after balancing, and per-class classification performance on the test set.

🧩 Model Files

| File | Description |
|---|---|
| emotion_vgg19_model.h5 | Original fine-tuned TensorFlow model (≈230 MB) |
| emotion_vgg19_optimized.tflite | Optimized TensorFlow Lite model (≈19.2 MB) for mobile/edge devices |
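The card does not state how the TFLite file was produced. One common route, shown here only as an assumption, is the standard TensorFlow Lite converter with default optimizations, which applies dynamic-range quantization and stores weights as 8-bit integers. The helper names `convert_to_tflite` and `export_tflite` are hypothetical.

```python
import tensorflow as tf


def convert_to_tflite(keras_model, quantize=True):
    """Convert a Keras model to TFLite bytes, optionally with dynamic-range quantization."""
    converter = tf.lite.TFLiteConverter.from_keras_model(keras_model)
    if quantize:
        # Assumed setting: weights are stored as 8-bit integers instead of float32.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    return converter.convert()


def export_tflite(h5_path, tflite_path):
    """Load a saved Keras model, convert it, and write the .tflite file."""
    model = tf.keras.models.load_model(h5_path)
    tflite_bytes = convert_to_tflite(model)
    with open(tflite_path, "wb") as f:
        f.write(tflite_bytes)
    return len(tflite_bytes)
```

A call such as `export_tflite('emotion_vgg19_model.h5', 'emotion_vgg19_optimized.tflite')` would write the converted file and return its size in bytes. The resulting size depends on the converter settings and may differ from the published file.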

🧰 Inference Example

```python
import tensorflow as tf
from tensorflow.keras.preprocessing import image
import numpy as np

# Load original model
model_path = 'emotion_vgg19_model.h5'
model = tf.keras.models.load_model(model_path)

# Prepare input
img = image.load_img('test_face.jpg', target_size=(224, 224))
input_data = np.expand_dims(image.img_to_array(img) / 255.0, axis=0)

# Run inference with original model
pred = model.predict(input_data)
classes = ['Angry', 'Disgust', 'Fear', 'Happy', 'Neutral', 'Sad', 'Surprise']
print("Original Model Prediction:", classes[np.argmax(pred)])

# Load TFLite optimized model
tflite_model_path = 'emotion_vgg19_optimized.tflite'
interpreter = tf.lite.Interpreter(model_path=tflite_model_path)
interpreter.allocate_tensors()

input_index = interpreter.get_input_details()[0]['index']
output_index = interpreter.get_output_details()[0]['index']

interpreter.set_tensor(input_index, input_data.astype(np.float32))
interpreter.invoke()

output = interpreter.get_tensor(output_index)
print("TFLite Model Prediction:", classes[np.argmax(output)])
```
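Both models return a vector of seven scores, so the top prediction alone hides how confident the model is. The hypothetical helper below, `top_k`, is a small sketch that lists the most likely emotions with their scores. It assumes the output is a softmax probability vector in the class order used in the example above.

```python
import numpy as np

CLASSES = ['Angry', 'Disgust', 'Fear', 'Happy', 'Neutral', 'Sad', 'Surprise']


def top_k(probabilities, k=3, classes=CLASSES):
    """Return the k most likely (label, probability) pairs for one prediction."""
    probs = np.asarray(probabilities, dtype=float).reshape(-1)
    if probs.size != len(classes):
        raise ValueError(f"expected {len(classes)} scores, got {probs.size}")
    order = np.argsort(probs)[::-1][:k]  # indices sorted by descending score
    return [(classes[i], float(probs[i])) for i in order]
```

It accepts either output directly, for example `top_k(pred)` for the Keras model or `top_k(output)` for the TFLite interpreter.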

🚀 Key Features

- Dual-dataset fine-tuning (CK+ + RAF-DB)

- Balanced training set with augmentation

- 77.51% accuracy on the held-out test set (weighted F1-score 0.78)

- Mobile-optimized TFLite version (19.2 MB)

- Suitable for real-time emotion-aware applications

🏷️ Tags

emotion-recognition vgg19 facial-expression deep-learning tensorflow tflite ckplus rafdb computer-vision affective-computing multimodal-ai fine-tuning

📄 Citation

```bibtex
@misc{pasindu_sewmuthu_abewickrama_singhe_2025,
  author    = { Pasindu Sewmuthu Abewickrama Singhe },
  title     = { vgg19-emotion-recognition-ckplus-rafdb (Revision dad246e) },
  year      = 2025,
  url       = { https://huggingface.co/PSewmuthu/vgg19-emotion-recognition-ckplus-rafdb },
  doi       = { 10.57967/hf/6651 },
  publisher = { Hugging Face }
}
```

👤 Author & Model Info

Author: P.S. Abewickrama Singhe

Developed with: TensorFlow + Keras

License: Apache-2.0

Date: October 2025

Email: [email protected]
