Update README.md

README.md

CHANGED

|

@@ -1,52 +1,87 @@

|

|

| 1 |

---

|

| 2 |

library_name: transformers

|

| 3 |

license: apache-2.0

|

| 4 |

-

license_link: https://huggingface.co/Qwen/Qwen3-

|

| 5 |

pipeline_tag: text-generation

|

| 6 |

-

base_model:

|

| 7 |

-

- Qwen/Qwen3-Coder-30B-A3B-Instruct

|

| 8 |

---

|

| 9 |

-

# Qwen3-Coder-30B-A3B-Instruct-AWQ-8bit

|

| 10 |

|

| 11 |

-

|

| 12 |

-

|

| 13 |

|

| 14 |

## Inference

|

| 15 |

-

|

| 16 |

-

|

| 17 |

pip install -U vllm

|

| 18 |

```

|

| 19 |

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

|

| 24 |

-

--tensor-parallel-size 4 \

|

| 25 |

-

--enable-auto-tool-choice \

|

| 26 |

-

--tool-call-parser hermes

|

| 27 |

```

|

| 28 |

|

| 29 |

-

|

| 30 |

-

|

| 31 |

<img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>

|

| 32 |

</a>

|

| 33 |

|

| 34 |

## Highlights

|

| 35 |

|

| 36 |

-

|

| 37 |

|

| 38 |

-

- **Significant

|

| 39 |

-

- **

|

| 40 |

-

- **

|

|

|

|

| 41 |

|

| 42 |

-

: 32 for Q and 4 for KV

|

| 52 |

- Number of Experts: 128

|

|

@@ -55,12 +90,53 @@ vllm serve cpatonn/Qwen3-Coder-30B-A3B-Instruct-AWQ-8bit \

|

|

| 55 |

|

| 56 |

**NOTE: This model supports only non-thinking mode and does not generate ``<think></think>`` blocks in its output. Meanwhile, specifying `enable_thinking=False` is no longer required.**

|

| 57 |

|

| 58 |

-

For more details, including benchmark evaluation, hardware requirements, and inference performance, please refer to our [blog](https://qwenlm.github.io/blog/qwen3

|

| 59 |

|

| 60 |

|

| 61 |

## Quickstart

|

| 62 |

|

| 63 |

-

|

| 64 |

|

| 65 |

With `transformers<4.51.0`, you will encounter the following error:

|

| 66 |

```

|

|

@@ -71,7 +147,7 @@ The following contains a code snippet illustrating how to use the model generate

|

|

| 71 |

```python

|

| 72 |

from transformers import AutoModelForCausalLM, AutoTokenizer

|

| 73 |

|

| 74 |

-

model_name = "Qwen/Qwen3-

|

| 75 |

|

| 76 |

# load the tokenizer and the model

|

| 77 |

tokenizer = AutoTokenizer.from_pretrained(model_name)

|

|

@@ -82,7 +158,7 @@ model = AutoModelForCausalLM.from_pretrained(

|

|

| 82 |

)

|

| 83 |

|

| 84 |

# prepare the model input

|

| 85 |

-

prompt = "

|

| 86 |

messages = [

|

| 87 |

{"role": "user", "content": prompt}

|

| 88 |

]

|

|

@@ -96,7 +172,7 @@ model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

|

|

| 96 |

# conduct text completion

|

| 97 |

generated_ids = model.generate(

|

| 98 |

**model_inputs,

|

| 99 |

-

max_new_tokens=

|

| 100 |

)

|

| 101 |

output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

|

| 102 |

|

|

@@ -105,59 +181,61 @@ content = tokenizer.decode(output_ids, skip_special_tokens=True)

|

|

| 105 |

print("content:", content)

|

| 106 |

```

|

| 107 |

|

| 108 |

**Note: If you encounter out-of-memory (OOM) issues, consider reducing the context length to a shorter value, such as `32,768`.**

|

| 109 |

|

| 110 |

For local use, applications such as Ollama, LMStudio, MLX-LM, llama.cpp, and KTransformers also support Qwen3.

|

| 111 |

|

| 112 |

-

## Agentic

|

| 113 |

|

| 114 |

-

Qwen3

|

| 115 |

|

| 116 |

-

|

| 117 |

```python

|

| 118 |

-

|

| 119 |

-

|

| 120 |

-

|

| 121 |

|

| 122 |

# Define Tools

|

| 123 |

-

tools=[

|

| 124 |

-

{

|

| 125 |

-

|

| 126 |

-

|

| 127 |

-

|

| 128 |

-

|

| 129 |

-

"

|

| 130 |

-

"

|

| 131 |

-

"

|

| 132 |

-

"properties": {

|

| 133 |

-

'input_num': {

|

| 134 |

-

'type': 'number',

|

| 135 |

-

'description': 'input_num is a number that will be squared'

|

| 136 |

-

}

|

| 137 |

-

},

|

| 138 |

}

|

| 139 |

}

|

| 140 |

-

}

|

|

|

|

| 141 |

]

|

| 142 |

|

| 143 |

-

|

| 144 |

-

|

| 145 |

-

client = OpenAI(

|

| 146 |

-

# Use a custom endpoint compatible with OpenAI API

|

| 147 |

-

base_url='http://localhost:8000/v1', # api_base

|

| 148 |

-

api_key="EMPTY"

|

| 149 |

-

)

|

| 150 |

-

|

| 151 |

-

messages = [{'role': 'user', 'content': 'square the number 1024'}]

|

| 152 |

-

|

| 153 |

-

completion = client.chat.completions.create(

|

| 154 |

-

messages=messages,

|

| 155 |

-

model="Qwen3-Coder-30B-A3B-Instruct",

|

| 156 |

-

max_tokens=65536,

|

| 157 |

-

tools=tools,

|

| 158 |

-

)

|

| 159 |

|

| 160 |

-

|

| 161 |

```

|

| 162 |

|

| 163 |

## Best Practices

|

|

@@ -165,10 +243,14 @@ print(completion.choice[0])

|

|

| 165 |

To achieve optimal performance, we recommend the following settings:

|

| 166 |

|

| 167 |

1. **Sampling Parameters**:

|

| 168 |

-

- We suggest using `

|

|

|

|

| 169 |

|

| 170 |

-

2. **Adequate Output Length**: We recommend using an output length of

|

| 171 |

|

| 172 |

|

| 173 |

### Citation

|

| 174 |

|

|

|

|

| 1 |

---

|

| 2 |

library_name: transformers

|

| 3 |

license: apache-2.0

|

| 4 |

+

license_link: https://huggingface.co/Qwen/Qwen3-30B-A3B-Instruct-2507/blob/main/LICENSE

|

| 5 |

pipeline_tag: text-generation

|

| 6 |

+

base_model: Qwen/Qwen3-30B-A3B-Instruct-2507

|

|

|

|

| 7 |

---

|

|

|

|

| 8 |

|

| 9 |

+

# Qwen3-30B-A3B-Instruct-2507 AWQ - INT8

|

| 10 |

+

|

| 11 |

+

## Model Details

|

| 12 |

+

|

| 13 |

+

### Quantization Details

|

| 14 |

+

|

| 15 |

+

- **Quantization Method:** cyankiwi AWQ v1.0

|

| 16 |

+

- **Bits:** 8

|

| 17 |

+

- **Group Size:** 32

|

| 18 |

+

- **Calibration Dataset:** [nvidia/Llama-Nemotron-Post-Training-Dataset](https://huggingface.co/datasets/nvidia/Llama-Nemotron-Post-Training-Dataset)

|

| 19 |

+

- **Quantization Tool:** [llm-compressor](https://github.com/vllm-project/llm-compressor)

|

| 20 |

+

|

| 21 |

+

### Memory Usage

|

| 22 |

+

|

| 23 |

+

| **Type** | **Qwen3-30B-A3B-Instruct-2507** | **Qwen3-30B-A3B-Instruct-2507-AWQ-8bit** |

|

| 24 |

+

|:---------------:|:----------------:|:----------------:|

|

| 25 |

+

| **Memory Size** | 56.9 GB | 30.8 GB |

|

| 26 |

+

| **KV Cache per Token** | 48.0 kB | 24.0 kB |

|

| 27 |

+

| **KV Cache per Context** | 12.0 GB | 6.0 GB |
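The "per Context" row follows from the "per Token" row by simple multiplication. The sketch below reproduces that arithmetic under two assumptions that the table does not state explicitly: that "kB" and "GB" are binary units (KiB and GiB), and that "Context" means the full 262,144-token window. The helper name `kv_cache_gib` is illustrative only.

```python
# Per-token figures copied from the Memory Usage table.
# Assumption: "kB" in the table means KiB (1024 bytes).
KV_CACHE_PER_TOKEN_KIB = {
    "Qwen3-30B-A3B-Instruct-2507": 48.0,
    "Qwen3-30B-A3B-Instruct-2507-AWQ-8bit": 24.0,
}


def kv_cache_gib(per_token_kib: float, context_tokens: int) -> float:
    """Return the KV cache size in GiB for one sequence of `context_tokens` tokens."""
    return per_token_kib * context_tokens / (1024 * 1024)


for name, per_token in KV_CACHE_PER_TOKEN_KIB.items():
    full = kv_cache_gib(per_token, 262_144)   # assumed meaning of "per Context"
    reduced = kv_cache_gib(per_token, 32_768)  # a shorter context for comparison
    print(f"{name}: {full:.1f} GiB at 262,144 tokens, {reduced:.2f} GiB at 32,768 tokens")
```

Under these assumptions the full-context values match the table, and the same function gives a rough estimate of what a shorter context length would need per sequence.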

|

| 28 |

+

|

| 29 |

+

### Evaluations

|

| 30 |

+

|

| 31 |

+

| **Benchmarks** | **Qwen3-30B-A3B-Instruct-2507** | **Qwen3-30B-A3B-Instruct-2507-AWQ-8bit** |

|

| 32 |

+

|:---------------:|:----------------:|:----------------:|

|

| 33 |

+

| **Perplexity** | 1.61607 | 1.61609 |

|

| 34 |

+

|

| 35 |

+

- **Evaluation Context Length:** 16384
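For readers unfamiliar with the metric, perplexity is conventionally defined as the exponential of the negative mean log-probability per token. The sketch below shows only that standard definition. It is not the evaluation script used for the table, and the log-probabilities in it are made up for illustration.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp(-mean natural-log probability per token)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# Hypothetical log-probabilities for a four-token sequence (not real model output).
example = [math.log(0.5), math.log(0.25), math.log(0.5), math.log(0.25)]
print(perplexity(example))

# Relative gap between the two perplexities reported in the table above.
relative_gap = (1.61609 - 1.61607) / 1.61607
print(f"relative gap: {relative_gap:.2e}")
```

Lower is better, so the small relative gap is what indicates that the quantized model stays close to the original on this measure.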

|

| 36 |

|

| 37 |

## Inference

|

| 38 |

+

|

| 39 |

+

### Prerequisite

|

| 40 |

+

|

| 41 |

+

```bash

|

| 42 |

pip install -U vllm

|

| 43 |

```

|

| 44 |

|

| 45 |

+

### Basic Usage

|

| 46 |

+

|

| 47 |

+

```bash

|

| 48 |

+

vllm serve cyankiwi/Qwen3-30B-A3B-Instruct-2507-AWQ-8bit --max-model-len 262144

|

| 49 |

```
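Once the server is running, it can be queried through its OpenAI-compatible HTTP API. The following is a minimal sketch using only the Python standard library. It assumes the server listens on vLLM's default `http://localhost:8000/v1` and that the model is registered under the name passed to `vllm serve`. The helpers `build_chat_request` and `chat` are hypothetical names, not part of vLLM.

```python
import json
import urllib.request

# Assumptions: local server on the default port, model name as given to `vllm serve`.
BASE_URL = "http://localhost:8000/v1"
MODEL = "cyankiwi/Qwen3-30B-A3B-Instruct-2507-AWQ-8bit"


def build_chat_request(prompt: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a POST request for the chat completions route."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Uncomment once the server is up:
# print(chat("Give me a short introduction to large language models."))
```

Building the request separately from sending it makes it easy to inspect the payload before any network call is made.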

|

| 50 |

|

| 51 |

+

## Additional Information

|

| 52 |

+

|

| 53 |

+

### Changelog

|

| 54 |

+

|

| 55 |

+

- **v1.0.0** - cyankiwi AWQ v1.0 release

|

| 56 |

+

|

| 57 |

+

### Authors

|

| 58 |

+

|

| 59 |

+

- **Name:** Ton Cao

|

| 60 |

+

- **Contacts:** [email protected]

|

| 61 |

+

|

| 62 |

+

# Qwen3-30B-A3B-Instruct-2507

|

| 63 |

+

<a href="https://chat.qwen.ai/?model=Qwen3-30B-A3B-2507" target="_blank" style="margin: 2px;">

|

| 64 |

<img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>

|

| 65 |

</a>

|

| 66 |

|

| 67 |

## Highlights

|

| 68 |

|

| 69 |

+

We introduce the updated version of the **Qwen3-30B-A3B non-thinking mode**, named **Qwen3-30B-A3B-Instruct-2507**, featuring the following key enhancements:

|

| 70 |

|

| 71 |

+

- **Significant improvements** in general capabilities, including **instruction following, logical reasoning, text comprehension, mathematics, science, coding and tool usage**.

|

| 72 |

+

- **Substantial gains** in long-tail knowledge coverage across **multiple languages**.

|

| 73 |

+

- **Markedly better alignment** with user preferences in **subjective and open-ended tasks**, enabling more helpful responses and higher-quality text generation.

|

| 74 |

+

- **Enhanced capabilities** in **256K long-context understanding**.

|

| 75 |

|

| 76 |

+

|

| 77 |

|

| 78 |

## Model Overview

|

| 79 |

|

| 80 |

+

**Qwen3-30B-A3B-Instruct-2507** has the following features:

|

| 81 |

- Type: Causal Language Models

|

| 82 |

- Training Stage: Pretraining & Post-training

|

| 83 |

- Number of Parameters: 30.5B in total and 3.3B activated

|

| 84 |

+

- Number of Parameters (Non-Embedding): 29.9B

|

| 85 |

- Number of Layers: 48

|

| 86 |

- Number of Attention Heads (GQA): 32 for Q and 4 for KV

|

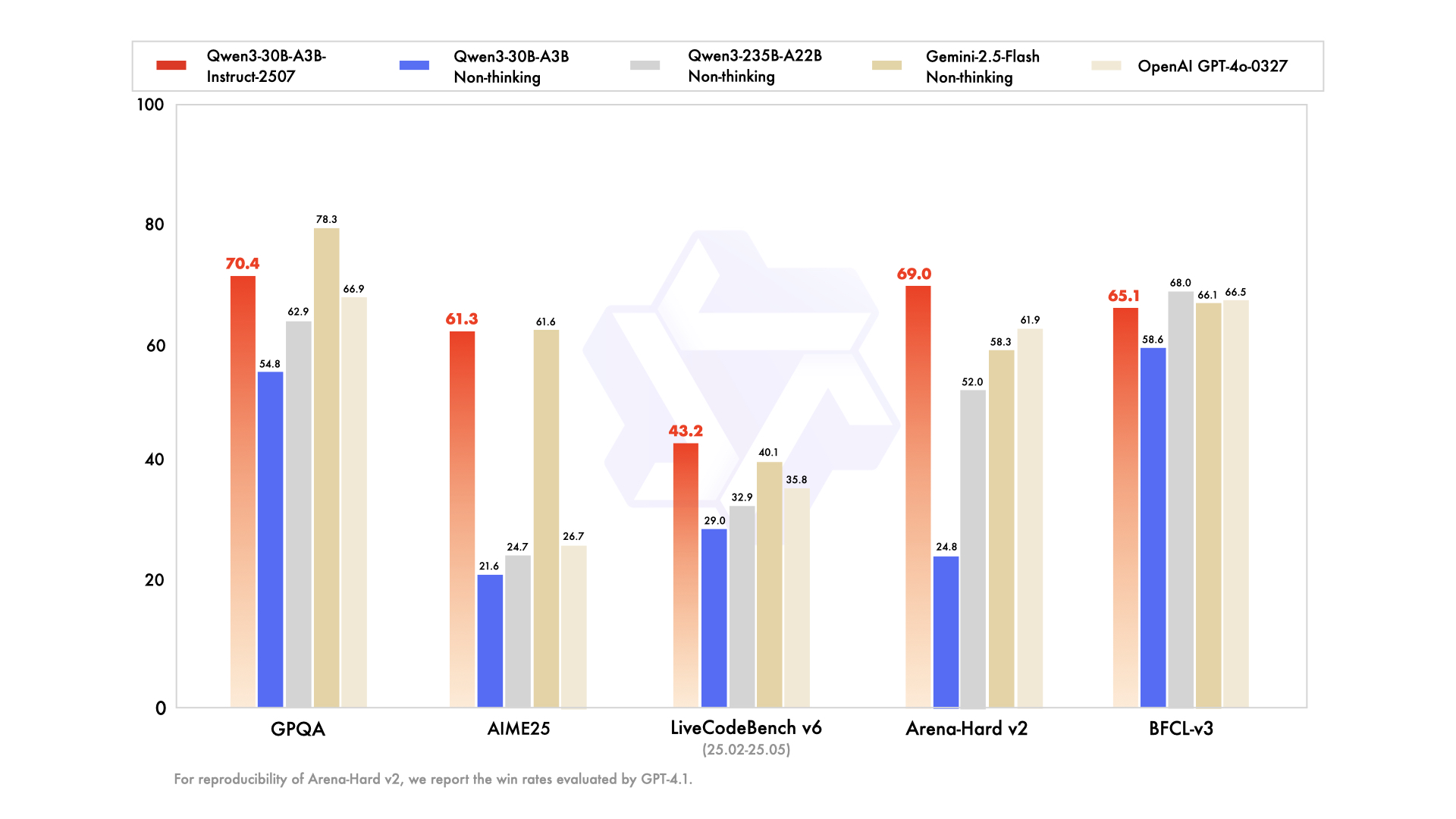

| 87 |

- Number of Experts: 128

|

|

|

|

| 90 |

|

| 91 |

**NOTE: This model supports only non-thinking mode and does not generate ``<think></think>`` blocks in its output. Meanwhile, specifying `enable_thinking=False` is no longer required.**

|

| 92 |

|

| 93 |

+

For more details, including benchmark evaluation, hardware requirements, and inference performance, please refer to our [blog](https://qwenlm.github.io/blog/qwen3/), [GitHub](https://github.com/QwenLM/Qwen3), and [Documentation](https://qwen.readthedocs.io/en/latest/).

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

## Performance

|

| 97 |

+

|

| 98 |

+

| | Deepseek-V3-0324 | GPT-4o-0327 | Gemini-2.5-Flash Non-Thinking | Qwen3-235B-A22B Non-Thinking | Qwen3-30B-A3B Non-Thinking | Qwen3-30B-A3B-Instruct-2507 |

|

| 99 |

+

|--- | --- | --- | --- | --- | --- | --- |

|

| 100 |

+

| **Knowledge** | | | | | | |

|

| 101 |

+

| MMLU-Pro | **81.2** | 79.8 | 81.1 | 75.2 | 69.1 | 78.4 |

|

| 102 |

+

| MMLU-Redux | 90.4 | **91.3** | 90.6 | 89.2 | 84.1 | 89.3 |

|

| 103 |

+

| GPQA | 68.4 | 66.9 | **78.3** | 62.9 | 54.8 | 70.4 |

|

| 104 |

+

| SuperGPQA | **57.3** | 51.0 | 54.6 | 48.2 | 42.2 | 53.4 |

|

| 105 |

+

| **Reasoning** | | | | | | |

|

| 106 |

+

| AIME25 | 46.6 | 26.7 | **61.6** | 24.7 | 21.6 | 61.3 |

|

| 107 |

+

| HMMT25 | 27.5 | 7.9 | **45.8** | 10.0 | 12.0 | 43.0 |

|

| 108 |

+

| ZebraLogic | 83.4 | 52.6 | 57.9 | 37.7 | 33.2 | **90.0** |

|

| 109 |

+

| LiveBench 20241125 | 66.9 | 63.7 | **69.1** | 62.5 | 59.4 | 69.0 |

|

| 110 |

+

| **Coding** | | | | | | |

|

| 111 |

+

| LiveCodeBench v6 (25.02-25.05) | **45.2** | 35.8 | 40.1 | 32.9 | 29.0 | 43.2 |

|

| 112 |

+

| MultiPL-E | 82.2 | 82.7 | 77.7 | 79.3 | 74.6 | **83.8** |

|

| 113 |

+

| Aider-Polyglot | 55.1 | 45.3 | 44.0 | **59.6** | 24.4 | 35.6 |

|

| 114 |

+

| **Alignment** | | | | | | |

|

| 115 |

+

| IFEval | 82.3 | 83.9 | 84.3 | 83.2 | 83.7 | **84.7** |

|

| 116 |

+

| Arena-Hard v2* | 45.6 | 61.9 | 58.3 | 52.0 | 24.8 | **69.0** |

|

| 117 |

+

| Creative Writing v3 | 81.6 | 84.9 | 84.6 | 80.4 | 68.1 | **86.0** |

|

| 118 |

+

| WritingBench | 74.5 | 75.5 | 80.5 | 77.0 | 72.2 | **85.5** |

|

| 119 |

+

| **Agent** | | | | | | |

|

| 120 |

+

| BFCL-v3 | 64.7 | 66.5 | 66.1 | **68.0** | 58.6 | 65.1 |

|

| 121 |

+

| TAU1-Retail | 49.6 | 60.3# | **65.2** | 65.2 | 38.3 | 59.1 |

|

| 122 |

+

| TAU1-Airline | 32.0 | 42.8# | **48.0** | 32.0 | 18.0 | 40.0 |

|

| 123 |

+

| TAU2-Retail | **71.1** | 66.7# | 64.3 | 64.9 | 31.6 | 57.0 |

|

| 124 |

+

| TAU2-Airline | 36.0 | 42.0# | **42.5** | 36.0 | 18.0 | 38.0 |

|

| 125 |

+

| TAU2-Telecom | **34.0** | 29.8# | 16.9 | 24.6 | 18.4 | 12.3 |

|

| 126 |

+

| **Multilingualism** | | | | | | |

|

| 127 |

+

| MultiIF | 66.5 | 70.4 | 69.4 | 70.2 | **70.8** | 67.9 |

|

| 128 |

+

| MMLU-ProX | 75.8 | 76.2 | **78.3** | 73.2 | 65.1 | 72.0 |

|

| 129 |

+

| INCLUDE | 80.1 | 82.1 | **83.8** | 75.6 | 67.8 | 71.9 |

|

| 130 |

+

| PolyMATH | 32.2 | 25.5 | 41.9 | 27.0 | 23.3 | **43.1** |

|

| 131 |

+

|

| 132 |

+

*: For reproducibility, we report the win rates evaluated by GPT-4.1.

|

| 133 |

+

|

| 134 |

+

\#: Results were generated using GPT-4o-20241120, as access to the native function calling API of GPT-4o-0327 was unavailable.

|

| 135 |

|

| 136 |

|

| 137 |

## Quickstart

|

| 138 |

|

| 139 |

+

Support for Qwen3-MoE is included in the latest Hugging Face `transformers`, and we advise you to use the latest version of `transformers`.

|

| 140 |

|

| 141 |

With `transformers<4.51.0`, you will encounter the following error:

|

| 142 |

```

|

|

|

|

| 147 |

```python

|

| 148 |

from transformers import AutoModelForCausalLM, AutoTokenizer

|

| 149 |

|

| 150 |

+

model_name = "Qwen/Qwen3-30B-A3B-Instruct-2507"

|

| 151 |

|

| 152 |

# load the tokenizer and the model

|

| 153 |

tokenizer = AutoTokenizer.from_pretrained(model_name)

|

|

|

|

| 158 |

)

|

| 159 |

|

| 160 |

# prepare the model input

|

| 161 |

+

prompt = "Give me a short introduction to large language model."

|

| 162 |

messages = [

|

| 163 |

{"role": "user", "content": prompt}

|

| 164 |

]

|

|

|

|

| 172 |

# conduct text completion

|

| 173 |

generated_ids = model.generate(

|

| 174 |

**model_inputs,

|

| 175 |

+

max_new_tokens=16384

|

| 176 |

)

|

| 177 |

output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

|

| 178 |

|

|

|

|

| 181 |

print("content:", content)

|

| 182 |

```

|

| 183 |

|

| 184 |

+

For deployment, you can use `sglang>=0.4.6.post1` or `vllm>=0.8.5` to create an OpenAI-compatible API endpoint:

|

| 185 |

+

- SGLang:

|

| 186 |

+

```shell

|

| 187 |

+

python -m sglang.launch_server --model-path Qwen/Qwen3-30B-A3B-Instruct-2507 --context-length 262144

|

| 188 |

+

```

|

| 189 |

+

- vLLM:

|

| 190 |

+

```shell

|

| 191 |

+

vllm serve Qwen/Qwen3-30B-A3B-Instruct-2507 --max-model-len 262144

|

| 192 |

+

```

|

| 193 |

+

|

| 194 |

**Note: If you encounter out-of-memory (OOM) issues, consider reducing the context length to a shorter value, such as `32,768`.**

|

| 195 |

|

| 196 |

For local use, applications such as Ollama, LMStudio, MLX-LM, llama.cpp, and KTransformers also support Qwen3.

|

| 197 |

|

| 198 |

+

## Agentic Use

|

| 199 |

|

| 200 |

+

Qwen3 excels at tool calling. We recommend using [Qwen-Agent](https://github.com/QwenLM/Qwen-Agent) to make the best use of Qwen3's agentic abilities. Qwen-Agent encapsulates tool-calling templates and tool-calling parsers internally, greatly reducing coding complexity.

|

| 201 |

|

| 202 |

+

To define the available tools, you can use an MCP configuration file, use Qwen-Agent's built-in tools, or integrate your own tools.

|

| 203 |

```python

|

| 204 |

+

from qwen_agent.agents import Assistant

|

| 205 |

+

|

| 206 |

+

# Define LLM

|

| 207 |

+

llm_cfg = {

|

| 208 |

+

'model': 'Qwen3-30B-A3B-Instruct-2507',

|

| 209 |

+

|

| 210 |

+

# Use a custom endpoint compatible with OpenAI API:

|

| 211 |

+

'model_server': 'http://localhost:8000/v1', # api_base

|

| 212 |

+

'api_key': 'EMPTY',

|

| 213 |

+

}

|

| 214 |

|

| 215 |

# Define Tools

|

| 216 |

+

tools = [

|

| 217 |

+

{'mcpServers': { # You can specify the MCP configuration file

|

| 218 |

+

'time': {

|

| 219 |

+

'command': 'uvx',

|

| 220 |

+

'args': ['mcp-server-time', '--local-timezone=Asia/Shanghai']

|

| 221 |

+

},

|

| 222 |

+

"fetch": {

|

| 223 |

+

"command": "uvx",

|

| 224 |

+

"args": ["mcp-server-fetch"]

|

| 225 |

}

|

| 226 |

}

|

| 227 |

+

},

|

| 228 |

+

'code_interpreter', # Built-in tools

|

| 229 |

]

|

| 230 |

|

| 231 |

+

# Define Agent

|

| 232 |

+

bot = Assistant(llm=llm_cfg, function_list=tools)

|

| 233 |

|

| 234 |

+

# Streaming generation

|

| 235 |

+

messages = [{'role': 'user', 'content': 'https://qwenlm.github.io/blog/ Introduce the latest developments of Qwen'}]

|

| 236 |

+

for responses in bot.run(messages=messages):

|

| 237 |

+

pass

|

| 238 |

+

print(responses)

|

| 239 |

```

|

| 240 |

|

| 241 |

## Best Practices

|

|

|

|

| 243 |

To achieve optimal performance, we recommend the following settings:

|

| 244 |

|

| 245 |

1. **Sampling Parameters**:

|

| 246 |

+

- We suggest using `Temperature=0.7`, `TopP=0.8`, `TopK=20`, and `MinP=0`.

|

| 247 |

+

- For supported frameworks, you can adjust the `presence_penalty` parameter between 0 and 2 to reduce endless repetitions. However, using a higher value may occasionally result in language mixing and a slight decrease in model performance.

|

| 248 |

|

| 249 |

+

2. **Adequate Output Length**: We recommend using an output length of 16,384 tokens for most queries, which is adequate for instruct models.

|

| 250 |

|

| 251 |

+

3. **Standardize Output Format**: We recommend using prompts to standardize model outputs when benchmarking.

|

| 252 |

+

- **Math Problems**: Include "Please reason step by step, and put your final answer within \boxed{}." in the prompt.

|

| 253 |

+

- **Multiple-Choice Questions**: Add the following JSON structure to the prompt to standardize responses: "Please show your choice in the `answer` field with only the choice letter, e.g., `"answer": "C"`."
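The recommendations above can be collected into a single request payload. The sketch below is one possible way to do that, assuming an OpenAI-compatible server that accepts `top_k` and `min_p` as extra sampling fields (vLLM does, but these are not part of the official OpenAI API). The helper `build_payload`, the `kind` labels, and the default model name are hypothetical.

```python
# Sampling values taken from the recommendations above.
# Assumption: snake_case key names as used by OpenAI-compatible servers.
RECOMMENDED_SAMPLING = {
    "temperature": 0.7,
    "top_p": 0.8,
    "top_k": 20,
    "min_p": 0.0,
    "max_tokens": 16384,
}

# Prompt suffixes quoted from the "Standardize Output Format" item.
MATH_SUFFIX = "Please reason step by step, and put your final answer within \\boxed{}."
CHOICE_SUFFIX = (
    "Please show your choice in the `answer` field with only the choice letter, "
    'e.g., `"answer": "C"`.'
)
_SUFFIXES = {"plain": "", "math": MATH_SUFFIX, "choice": CHOICE_SUFFIX}


def build_payload(question, kind="plain", presence_penalty=None,
                  model="Qwen3-30B-A3B-Instruct-2507"):
    """Return a chat-completions payload with the recommended settings applied."""
    if kind not in _SUFFIXES:
        raise ValueError(f"unknown kind: {kind!r}")
    suffix = _SUFFIXES[kind]
    content = f"{question}\n\n{suffix}" if suffix else question
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        **RECOMMENDED_SAMPLING,
    }
    if presence_penalty is not None:
        # The recommended range for reducing repetition is 0 to 2.
        if not 0 <= presence_penalty <= 2:
            raise ValueError("presence_penalty should be between 0 and 2")
        payload["presence_penalty"] = presence_penalty
    return payload


payload = build_payload("What is 17 * 23?", kind="math", presence_penalty=0.5)
print(payload["messages"][0]["content"])
```

Keeping the suffixes and sampling values in one place helps ensure that every benchmark query is sent with the same settings.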

|

| 254 |

|

| 255 |

### Citation

|

| 256 |

|