updated README.md

README.md

CHANGED

@@ -2,8 +2,82 @@

tags:
- model_hub_mixin
- pytorch_model_hub_mixin
pipeline_tag: tabular-regression
library_name: pytorch
datasets:
- gvlassis/california_housing
metrics:
- rmse
---

|

| 12 |

|

| 13 |

+

# wide-and-deep-net-california-housing

|

| 14 |

+

|

| 15 |

+

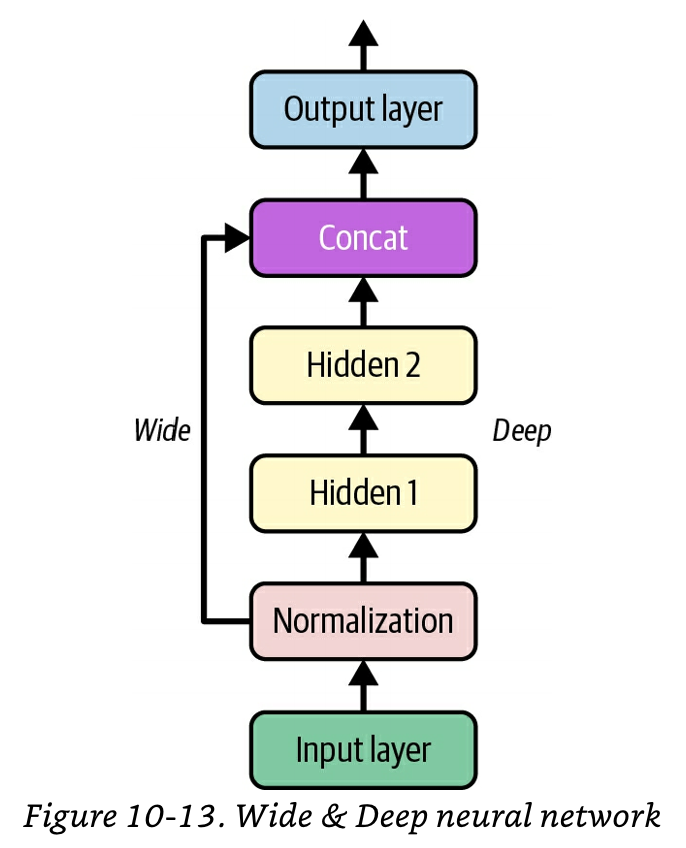

A wide & deep neural network trained on the California Housing dataset.

|

| 16 |

+

|

| 17 |

+

It takes eight inputs: `'MedInc'`, `'HouseAge'`, `'AveRooms'`, `'AveBedrms'`, `'Population'`, `'AveOccup'`, `'Latitude'` and `'Longitude'`. It predicts `'MedHouseVal'`.
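
As a rough illustration of the "wide & deep" data flow, the dependency-free sketch below sends the eight inputs through two hidden layers (the deep path) and also concatenates them unchanged with the hidden activations (the wide path) before the output layer. The layer sizes follow the `WideAndDeepNet` class in the Usage section. The helper names (`linear`, `relu`, `init_layer`, `forward`) and the random weights are assumptions made for illustration, not the trained model.

```python
import random


def linear(x, weights, bias):
    """Affine map: one output per (weight row, bias) pair."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(values):
    return [max(0.0, v) for v in values]


def init_layer(n_in, n_out, rng):
    """Hypothetical random initialisation; NOT the trained weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


rng = random.Random(0)
hidden1 = init_layer(8, 30, rng)   # 8 inputs -> 30 units
hidden2 = init_layer(30, 30, rng)  # 30 units -> 30 units
output = init_layer(38, 1, rng)    # 8 raw inputs + 30 hidden activations -> 1 value


def forward(x):
    act = relu(linear(x, *hidden1))
    act = relu(linear(act, *hidden2))
    concat = list(x) + act  # wide path (raw inputs) joined with the deep path
    return concat, linear(concat, *output)


concat, pred = forward([0.5] * 8)
print(len(concat), len(pred))  # 38 1
```

The concatenated vector has 38 entries, which is why the output layer in the Usage section is declared with 38 input features.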

It is a PyTorch adaptation of the TensorFlow model in Chapter 10 of Aurélien Géron's book 'Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow'.

Code: https://github.com/sambitmukherjee/handson-ml3-pytorch/blob/main/chapter10/wide_and_deep_net_california_housing.ipynb

Experiment tracking: https://wandb.ai/sadhaklal/wide-and-deep-net-california-housing

## Usage

```
from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing(as_frame=True)

from sklearn.model_selection import train_test_split

X_train_full, X_test, y_train_full, y_test = train_test_split(housing['data'], housing['target'], test_size=0.25, random_state=42)
X_train, X_valid, y_train, y_valid = train_test_split(X_train_full, y_train_full, test_size=0.25, random_state=42)

# Standardize every split with the training-set mean and standard deviation.
X_means, X_stds = X_train.mean(axis=0), X_train.std(axis=0)
X_train = (X_train - X_means) / X_stds
X_valid = (X_valid - X_means) / X_stds
X_test = (X_test - X_means) / X_stds

import torch

device = torch.device("cpu")

import torch.nn as nn
from huggingface_hub import PyTorchModelHubMixin

class WideAndDeepNet(nn.Module, PyTorchModelHubMixin):
    def __init__(self):
        super().__init__()
        self.hidden1 = nn.Linear(8, 30)
        self.hidden2 = nn.Linear(30, 30)
        self.output = nn.Linear(38, 1)  # 8 raw inputs + 30 hidden activations

    def forward(self, x):
        act = torch.relu(self.hidden1(x))
        act = torch.relu(self.hidden2(act))
        concat = torch.cat([x, act], dim=1)  # wide path (x) joined with deep path (act)
        return self.output(concat)

model = WideAndDeepNet.from_pretrained("sadhaklal/wide-and-deep-net-california-housing")
model.to(device)
model.eval()

# Let's predict on 3 unseen examples from the test set:
print(f"Ground truth housing prices: {y_test.values[:3]}")
x_new = torch.tensor(X_test.values[:3], dtype=torch.float32)
x_new = x_new.to(device)
with torch.no_grad():
    preds = model(x_new)
print(f"Predicted housing prices: {preds.squeeze()}")
```
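
The usage snippet standardizes the validation and test sets with the mean and standard deviation of the training set, so no statistics leak in from held-out data. The sketch below shows the same idea on made-up numbers for a single feature, using only the standard library. `statistics.stdev` is the sample standard deviation, which matches the pandas default (`ddof=1`). The `standardize` helper and all values are hypothetical.

```python
from statistics import mean, stdev

train = [1.0, 2.0, 3.0, 4.0, 5.0]  # made-up training values for one feature
test = [3.0, 6.0]                  # made-up held-out values

# Statistics come from the training split only.
mu, sigma = mean(train), stdev(train)


def standardize(values, mu, sigma):
    return [(v - mu) / sigma for v in values]


train_std = standardize(train, mu, sigma)
test_std = standardize(test, mu, sigma)  # reuses the training mu and sigma

print(train_std)
print(test_std)
```

After this step the training values have mean 0 and sample standard deviation 1, while held-out values are only expressed on the same scale and need not have those properties themselves.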

## Metric

RMSE on the test set: 0.546
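
For reference, RMSE is the square root of the mean squared difference between predictions and targets, so it is expressed in the same units as the target. A minimal sketch with made-up numbers follows. The `rmse` helper and the values are illustrative only and are not the evaluation code behind the figure above.

```python
import math


def rmse(preds, targets):
    """Root mean squared error between two equal-length sequences."""
    if len(preds) != len(targets) or not preds:
        raise ValueError("preds and targets must be non-empty and the same length")
    squared_errors = [(p - t) ** 2 for p, t in zip(preds, targets)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Made-up predictions and targets: errors are 1, 0 and -2.
score = rmse([2.0, 3.0, 5.0], [1.0, 3.0, 7.0])
print(round(score, 3))  # 1.291
```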

---

This model has been pushed to the Hub using the [PyTorchModelHubMixin](https://huggingface.co/docs/huggingface_hub/package_reference/mixins#huggingface_hub.PyTorchModelHubMixin) integration.