- Downloads last month: 1,250

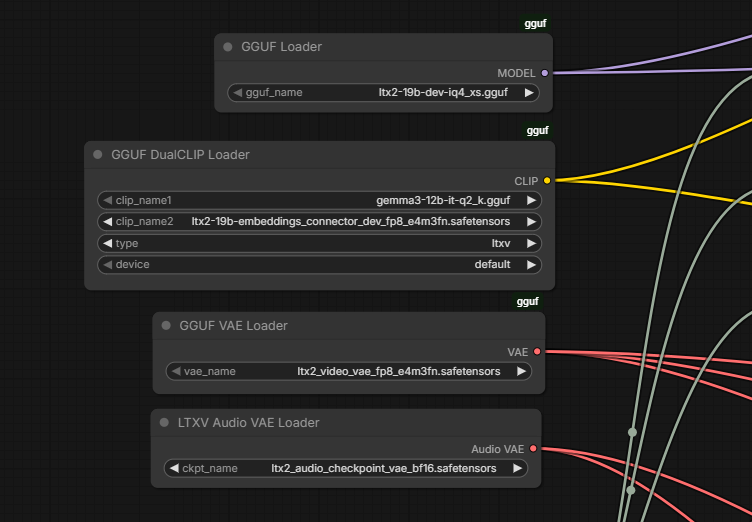

Hardware compatibility

- 2-bit
- 4-bit

Model tree for gguf-org/ltx2-gguf

- Base model: Lightricks/LTX-2