TokSuite – mBERT

Model Summary

TokSuite–mBERT is part of TokSuite, a suite of language models designed to study the impact of tokenizer choice on language model behavior under controlled conditions.

This model uses the mBERT tokenizer and is otherwise identical to the other TokSuite models in architecture, training data, training budget, and initialization. Because these factors are held fixed, behavioral differences between TokSuite models can be attributed to the tokenizer rather than to differences in model scale, data composition, or optimization.

Tokenizer

- Tokenizer: mBERT

- Tokenization method: WordPiece

- Vocabulary size: 110,000

- Out-of-vocabulary handling: [UNK] token

- Language coverage: Multilingual

- Pretokenization source: BERT

Processing details:

- Numbers: Learned

- Contractions: Composed

- Unicode normalization: None

- Whitespace / boundary markers: Normalized

- Zero-width chars: Normalized/Removed
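The "Pretokenization source: BERT" entry refers to BERT's basic pretokenizer, which runs before WordPiece. The sketch below is modeled on the `BasicTokenizer` logic in Google's original BERT code, simplified under stated assumptions: it assumes the cased variant (no lowercasing or accent stripping, in line with "Unicode normalization: None"), it checks only the main CJK Unified Ideographs block, and the function names are hypothetical. It shows zero-width and other format characters being dropped, whitespace being normalized, and punctuation being split off.

```python
import unicodedata


def _is_control(ch: str) -> bool:
    # Tab, newline and carriage return count as whitespace, not control.
    if ch in ("\t", "\n", "\r"):
        return False
    # Categories Cc (control) and Cf (format, e.g. U+200B ZERO WIDTH SPACE).
    return unicodedata.category(ch).startswith("C")


def _is_whitespace(ch: str) -> bool:
    if ch in (" ", "\t", "\n", "\r"):
        return True
    return unicodedata.category(ch) == "Zs"  # e.g. U+00A0 NO-BREAK SPACE


def _is_punctuation(ch: str) -> bool:
    cp = ord(ch)
    # ASCII symbols such as $ ^ ` are also treated as punctuation.
    if 33 <= cp <= 47 or 58 <= cp <= 64 or 91 <= cp <= 96 or 123 <= cp <= 126:
        return True
    return unicodedata.category(ch).startswith("P")


def _is_cjk(ch: str) -> bool:
    # Simplification: only the main CJK Unified Ideographs block is checked.
    return 0x4E00 <= ord(ch) <= 0x9FFF


def basic_pretokenize(text: str) -> list[str]:
    # 1. Drop NUL, U+FFFD and control/format chars; map all whitespace to " ".
    cleaned = []
    for ch in text:
        if ord(ch) == 0 or ch == "\ufffd" or _is_control(ch):
            continue
        cleaned.append(" " if _is_whitespace(ch) else ch)
    # 2. Surround CJK ideographs with spaces so each becomes its own word.
    spaced = "".join(f" {ch} " if _is_cjk(ch) else ch for ch in cleaned)
    # 3. Split on whitespace, then split each word around punctuation.
    tokens: list[str] = []
    for word in spaced.split():
        current = ""
        for ch in word:
            if _is_punctuation(ch):
                if current:
                    tokens.append(current)
                    current = ""
                tokens.append(ch)
            else:
                current += ch
        if current:
            tokens.append(current)
    return tokens


print(basic_pretokenize("Don't stop\u200b now"))
print(basic_pretokenize("你好, world"))
```

Under these assumptions, the zero-width space disappears and the contraction is split at the apostrophe, giving `['Don', "'", 't', 'stop', 'now']`; each Chinese character becomes its own pretoken before WordPiece is applied.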

Why mBERT?

mBERT was included in TokSuite to represent WordPiece-based multilingual tokenization with an explicit unknown-token mechanism. As described in the tokenizer selection rationale of the TokSuite paper, mBERT exemplifies a design point where tokenization relies on fixed subword vocabularies and explicit OOV handling rather than byte fallback.

Including mBERT enables TokSuite to study tokenizer behavior in settings where:

- WordPiece segmentation is used,

- strings that cannot be segmented with the vocabulary are mapped to a dedicated [UNK] token, and

- multilingual coverage is achieved through a shared subword vocabulary.

This makes mBERT a representative example of WordPiece tokenization.
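The WordPiece segmentation and [UNK] mechanism described above can be sketched as greedy longest-match-first matching, the algorithm used by the original BERT tokenizer. The toy vocabulary and the `wordpiece` function are hypothetical, and the real mBERT vocabulary is far larger. The key point is the final fallback: if any remainder of a word cannot be matched, the whole word becomes [UNK] rather than being spelled out in bytes.

```python
TOY_VOCAB = {"un", "##aff", "##able", "play", "##ing", "token"}  # hypothetical


def wordpiece(word: str, vocab: set[str], unk: str = "[UNK]",
              max_chars: int = 100) -> list[str]:
    # Very long words are mapped straight to the unknown token.
    if len(word) > max_chars:
        return [unk]
    pieces: list[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Greedy longest-match-first: shrink the candidate until it is in the vocab.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # word-internal pieces carry the ## prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            # No byte fallback: one unmatched remainder turns the whole word into [UNK].
            return [unk]
        pieces.append(match)
        start = end
    return pieces


for w in ("unaffable", "playing", "tokenz"):
    print(w, wordpiece(w, TOY_VOCAB))
```

Here "tokenz" becomes `['[UNK]']` even though "token" is in the vocabulary, because the trailing "##z" has no match. A byte-fallback tokenizer would instead emit byte tokens for the unmatched part and keep the rest of the word.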

Model Architecture

- Architecture: Decoder-only Transformer (Lingua's Llama-3.2-1B configuration)

- Non-embedding parameters: ~1B

- Context length: 4096 tokens

- Framework: Meta Lingua

- Initialization: Shared super-vocabulary initialization across TokSuite models

The architecture and training setup are identical across all TokSuite models; only the tokenizer differs.
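Because vocabulary size differs between tokenizers, the card reports non-embedding parameters, which stay fixed across TokSuite models. A rough sketch of how the embedding share scales with vocabulary size, assuming a hidden size of 2048 (the Llama-3.2-1B value; it is not stated in this card) and showing both tied and untied input/output embeddings, since the card does not say which is used:

```python
def embedding_params(vocab_size: int, d_model: int, tied: bool) -> int:
    # Input embedding table: one d_model-dimensional vector per vocabulary entry.
    params = vocab_size * d_model
    # An untied output projection (LM head) adds a second table of the same size.
    return params if tied else 2 * params


D_MODEL = 2048  # assumption: Llama-3.2-1B hidden size, not given in this card

for tied in (True, False):
    n = embedding_params(110_000, D_MODEL, tied)
    print(f"tied={tied}: {n / 1e6:.1f}M embedding parameters")
```

Under these assumptions, the 110,000-entry vocabulary adds roughly 225M (tied) or 451M (untied) parameters on top of the ~1B non-embedding parameters. This is why non-embedding counts are the comparable quantity across tokenizers with different vocabulary sizes.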

Training Data

The model was trained on a multilingual corpus totaling approximately 100B tokens, composed of:

- English: 40B tokens from FineWeb-Edu

- Multilingual: 60B tokens evenly distributed across:

- Chinese (ZH)

- Turkish (TR)

- Italian (IT)

- Farsi (FA)

You can find the pretraining dataset here: toksuite/toksuite_pretraining_data

All TokSuite models are trained using a fixed token budget, following standard practice in large-scale language model training.
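The mixture above works out to 15B tokens per non-English language. Because the budget is counted in tokens, the amount of raw text a model actually reads depends on how many bytes its tokenizer packs into each token. A minimal sketch of both calculations; the bytes-per-token values in the example are hypothetical placeholders, not TokSuite measurements, and `raw_text_gb` is an illustrative helper name.

```python
ENGLISH_TOKENS = 40e9
MULTILINGUAL_TOKENS = 60e9
LANGS = ["zh", "tr", "it", "fa"]

mixture = {"en": ENGLISH_TOKENS}
# "Evenly distributed": each non-English language gets an equal share.
mixture.update({lang: MULTILINGUAL_TOKENS / len(LANGS) for lang in LANGS})
total = sum(mixture.values())


def raw_text_gb(token_budget: float, bytes_per_token: float) -> float:
    # Gigabytes of raw text covered by a fixed token budget.
    return token_budget * bytes_per_token / 1e9


print({k: v / 1e9 for k, v in mixture.items()}, total / 1e9)
# Hypothetical compression rates: a tokenizer averaging more bytes per token
# covers more raw text under the same token budget.
for bpt in (3.0, 4.0):
    print(bpt, raw_text_gb(total, bpt))
```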

Training Procedure

- Training steps: 100,000

- Sequence length: 4096 tokens

- Batch size: 256 sequences

- Optimizer: AdamW

- Peak learning rate: 1e-3

- Learning rate schedule: Cosine decay with 2,000 warm-up steps

- Weight decay: 0.1
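These settings imply the token budget directly: 100,000 steps × 256 sequences × 4,096 tokens ≈ 104.9B tokens, consistent with the ~100B-token corpus (assuming fully packed sequences). The sketch below computes that figure and one common form of warm-up followed by cosine decay. The linear warm-up shape and the final learning rate of 0 are assumptions; the card only states the peak rate, the warm-up length, and that the decay is cosine.

```python
import math

STEPS = 100_000
SEQ_LEN = 4096
BATCH_SIZE = 256
PEAK_LR = 1e-3
WARMUP_STEPS = 2_000
MIN_LR = 0.0  # assumption: final learning rate is not stated in the card


def total_tokens(steps: int, seq_len: int, batch_size: int) -> int:
    # Assumes every sequence is fully packed (no padding tokens).
    return steps * seq_len * batch_size


def lr_at(step: int) -> float:
    if step < WARMUP_STEPS:
        # Assumed linear warm-up from 0 to the peak rate.
        return PEAK_LR * step / WARMUP_STEPS
    # Cosine decay from PEAK_LR to MIN_LR over the remaining steps.
    progress = min(1.0, (step - WARMUP_STEPS) / (STEPS - WARMUP_STEPS))
    return MIN_LR + 0.5 * (PEAK_LR - MIN_LR) * (1.0 + math.cos(math.pi * progress))


print(total_tokens(STEPS, SEQ_LEN, BATCH_SIZE))  # 104857600000
for s in (0, 1_000, 2_000, 51_000, 100_000):
    print(s, lr_at(s))
```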

Evaluation

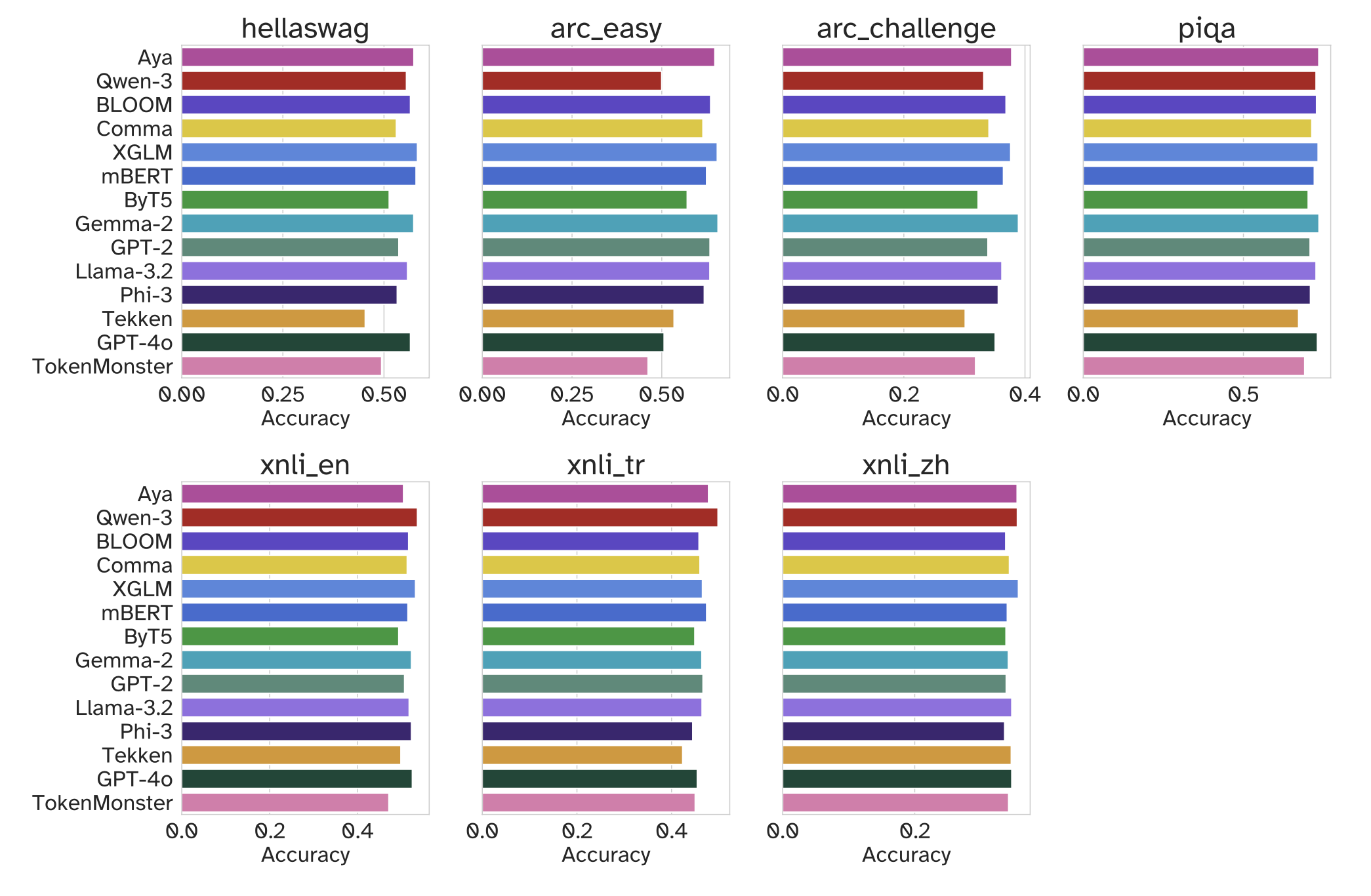

Canonical Benchmarks

The model was evaluated on standard base language model benchmarks:

- HellaSwag

- ARC

- PIQA

- XNLI

These evaluations verify that the model exhibits reasonable base language modeling behavior at its scale and training budget.

TokSuite Robustness Benchmark

TokSuite–mBERT is evaluated on the TokSuite robustness benchmark, which measures sensitivity to real-world text perturbations, including:

- orthographic and spelling variations,

- diacritics presence and absence,

- keyboard and input-method noise,

- Unicode formatting and homoglyphs,

- OCR and spacing artifacts,

- LaTeX and STEM-style formatting.
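Two of these perturbation families can be illustrated in a few lines of Python. The functions below are hypothetical and are not the benchmark's generation code: one restyles ASCII letters as Unicode Mathematical Bold characters, the other swaps a few Latin letters for visually identical Cyrillic homoglyphs. Since this tokenizer applies no Unicode normalization, both produce code points that differ from the clean text; NFKC normalization, shown only for contrast, undoes the styling but not the homoglyph swap.

```python
import unicodedata

# Latin -> Cyrillic look-alikes (a small, hypothetical subset).
HOMOGLYPHS = {"a": "\u0430", "c": "\u0441", "e": "\u0435", "o": "\u043e", "p": "\u0440"}


def to_math_bold(text: str) -> str:
    # Map A-Z and a-z to MATHEMATICAL BOLD letters (starting at U+1D400 / U+1D41A).
    out = []
    for ch in text:
        if "A" <= ch <= "Z":
            out.append(chr(0x1D400 + ord(ch) - ord("A")))
        elif "a" <= ch <= "z":
            out.append(chr(0x1D41A + ord(ch) - ord("a")))
        else:
            out.append(ch)
    return "".join(out)


def swap_homoglyphs(text: str) -> str:
    return "".join(HOMOGLYPHS.get(ch, ch) for ch in text)


clean = "Hello world"
styled = to_math_bold(clean)
spoofed = swap_homoglyphs(clean)
print(styled == clean, spoofed == clean)                # False False
print(unicodedata.normalize("NFKC", styled) == clean)   # True
print(unicodedata.normalize("NFKC", spoofed) == clean)  # False
```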

Tokenization Robustness under Multilingual Text Perturbations

Values represent relative performance drop, computed as (Acc_clean − Acc_perturbed) / Acc_clean. Lower values indicate greater robustness; negative values mean accuracy improved under perturbation.
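A minimal sketch of the metric as defined above; `relative_drop` is an illustrative name and the accuracies in the example are made up.

```python
def relative_drop(acc_clean: float, acc_perturbed: float) -> float:
    # (Acc_clean - Acc_perturbed) / Acc_clean; undefined when clean accuracy is 0.
    if acc_clean <= 0:
        raise ValueError("acc_clean must be positive")
    return (acc_clean - acc_perturbed) / acc_clean


# Hypothetical accuracies, not TokSuite results.
print(relative_drop(0.60, 0.45))  # about 0.25: a quarter of clean accuracy lost
print(relative_drop(0.50, 0.52))  # negative value
```

Dividing by clean accuracy expresses each drop as a fraction of what the model could do on unperturbed inputs, which presumably keeps the numbers comparable across tasks with different baseline accuracy.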

Perturbation types include:

- Input: non-native keyboard input and romanization

- Diacr.: optional diacritics

- Orth.& Gram.: orthographic and grammatical errors

- Morph: morphological variations including derivations, inflections, and contractions

- Noise: homoglyph substitutions, OCR artifacts, typos, and spacing errors

- LaTeX: LaTeX-style mathematical formatting

- STEM: scientific diagrams and notational conventions

- Unic.: Unicode styling characters

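NEN denotes non-English inputs and EN denotes English inputs. The Avg column reports each tokenizer's average relative performance drop across all eleven perturbation columns, and the final Avg row reports the mean of each column across all tokenizers. Rows are sorted by the Avg column.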

| Model | Input (NEN) | Diacr. (NEN) | Orth. & Gram. (EN) | Orth. & Gram. (NEN) | Morph (EN) | Morph (NEN) | Noise (EN) | Noise (NEN) | LaTeX (EN) | STEM (EN) | Unic. (EN) | Avg ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| TokenMonster | 0.23 | 0.33 | 0.08 | 0.01 | 0.23 | -0.07 | 0.10 | 0.18 | 0.21 | 0.10 | 0.51 | 0.17 |
| XGLM | 0.34 | 0.49 | 0.10 | 0.11 | 0.25 | 0.07 | 0.12 | 0.22 | 0.29 | 0.29 | 0.11 | 0.22 |
| BLOOM | 0.30 | 0.34 | 0.13 | 0.07 | 0.18 | 0.11 | 0.18 | 0.18 | 0.24 | 0.11 | 0.57 | 0.22 |
| ByT5 | 0.30 | 0.44 | 0.04 | 0.06 | 0.27 | 0.04 | 0.14 | 0.18 | 0.17 | 0.29 | 0.53 | 0.22 |
| Comma | 0.28 | 0.43 | 0.05 | 0.07 | 0.18 | 0.00 | 0.11 | 0.20 | 0.23 | 0.29 | 0.61 | 0.22 |
| mBERT | 0.33 | 0.44 | 0.11 | 0.11 | 0.23 | 0.06 | 0.18 | 0.22 | 0.14 | 0.22 | 0.61 | 0.24 |
| GPT-4o | 0.30 | 0.51 | 0.08 | 0.05 | 0.21 | 0.05 | 0.16 | 0.19 | 0.24 | 0.33 | 0.55 | 0.24 |
| GPT-2 | 0.34 | 0.46 | 0.07 | 0.10 | 0.25 | 0.06 | 0.14 | 0.21 | 0.24 | 0.35 | 0.53 | 0.25 |
| Phi-3 | 0.33 | 0.46 | 0.16 | 0.09 | 0.27 | 0.08 | 0.17 | 0.21 | 0.24 | 0.22 | 0.55 | 0.25 |
| Gemma-2 | 0.32 | 0.42 | 0.14 | 0.15 | 0.24 | 0.03 | 0.16 | 0.25 | 0.22 | 0.36 | 0.57 | 0.26 |
| Qwen-3 | 0.36 | 0.42 | 0.14 | 0.11 | 0.25 | 0.06 | 0.16 | 0.23 | 0.26 | 0.29 | 0.57 | 0.26 |
| Llama-3.2 | 0.33 | 0.55 | 0.11 | 0.10 | 0.25 | 0.08 | 0.15 | 0.24 | 0.17 | 0.30 | 0.59 | 0.26 |
| Aya | 0.31 | 0.46 | 0.14 | 0.10 | 0.22 | 0.03 | 0.19 | 0.25 | 0.21 | 0.38 | 0.58 | 0.26 |
| Tekken | 0.33 | 0.47 | 0.18 | 0.03 | 0.31 | 0.10 | 0.14 | 0.21 | 0.27 | 0.43 | 0.54 | 0.27 |
| Avg | 0.31 | 0.44 | 0.11 | 0.08 | 0.24 | 0.04 | 0.15 | 0.21 | 0.22 | 0.28 | 0.53 | 0.24 |
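The averages can be spot-checked from the values in the table. The sketch below recomputes the mBERT row average and the Input (NEN) column average; because the table entries are themselves rounded to two decimals, a recomputed figure may occasionally differ from the reported one in the last digit.

```python
from statistics import mean

# mBERT row, in column order from Input (NEN) to Unic. (EN).
MBERT_ROW = [0.33, 0.44, 0.11, 0.11, 0.23, 0.06, 0.18, 0.22, 0.14, 0.22, 0.61]

# Input (NEN) column, one value per tokenizer from TokenMonster to Tekken.
INPUT_NEN_COLUMN = [0.23, 0.34, 0.30, 0.30, 0.28, 0.33, 0.30,
                    0.34, 0.33, 0.32, 0.36, 0.33, 0.31, 0.33]

row_avg = mean(MBERT_ROW)         # compare with mBERT's Avg column entry
col_avg = mean(INPUT_NEN_COLUMN)  # compare with the Avg row's Input (NEN) entry
print(round(row_avg, 2), round(col_avg, 2))  # 0.24 0.31
```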

Intended Use

This model is intended for:

- research on tokenization and robustness,

- multilingual NLP analysis,

- controlled ablation studies,

- benchmarking tokenizer behavior under noise.

It is not instruction-tuned, aligned, or optimized for deployment.

Limitations

- Trained on a limited set of five languages.

- Not optimized for instruction following or dialogue.

- The fixed token budget limits how much raw text the model sees, and that amount depends on the tokenizer's efficiency.

- Intended strictly for research purposes.

Ethical Considerations

TokSuite models are released to support scientific investigation of tokenization effects.

They may reflect biases present in large-scale web data and should not be used in high-stakes or user-facing applications without additional safeguards.

Citation

If you use this model, please cite:

@article{toksuite2025,
title={TokSuite: Measuring the Impact of Tokenizer Choice on Language Model Behavior},
author={Altıntaş, Gul Sena and Ehghaghi, Malikeh and Lester, Brian and Liu, Fengyuan and Zhao, Wanru and Ciccone, Marco and Raffel, Colin},
year={2025},
arxiv={https://arxiv.org/abs/2512.20757},
}
